Teaching Machines to Read Hands

How do you teach a computer to understand American Sign Language? That was the challenge my teammate and I set out to solve. Using the MNIST ASL dataset, we focused on static hand signs (letters and numbers) as the foundation for a machine learning model that could one day help bridge communication between the Deaf community and technology. The project became our testbed for comparing neural network architectures and for uncovering what it really takes for a computer to “see” a hand sign.

Building and Training the Models

We implemented three architectures: a simple neural network (SimpleNN), a convolutional neural network (CNN), and a CNN with Batch Normalization. To make the models more robust, I wrote a preprocessing and augmentation pipeline in PyTorch that applies random crops, rotations, and color jitter to simulate real-world variability.

Every model was trained under identical conditions, so we could compare their strengths and weaknesses fairly. The process was iterative: each run showed us something new about what worked and what didn’t.
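One way to keep the comparison fair is to define all three models for the same input shape and run each through one shared training function with the same optimizer settings. The sketch below is hypothetical: the layer sizes, `NUM_CLASSES = 24`, the dropout rate, and the Adam learning rate are assumptions, and random tensors stand in for the real dataset. The Batch Normalization variant differs from the plain CNN only by a `BatchNorm2d` layer after each convolution.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

NUM_CLASSES = 24  # assumption: replace with the number of classes in your label set


class SimpleNN(nn.Module):
    """Baseline without convolutions: the 28x28 image is flattened to a vector."""

    def __init__(self, num_classes: int = NUM_CLASSES):
        super().__init__()
        self.net = nn.Sequential(
            nn.Flatten(),
            nn.Linear(28 * 28, 256), nn.ReLU(),
            nn.Linear(256, 128), nn.ReLU(),
            nn.Linear(128, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


class CNN(nn.Module):
    """Two conv blocks; batch_norm=True adds BatchNorm2d after each convolution."""

    def __init__(self, num_classes: int = NUM_CLASSES, batch_norm: bool = False):
        super().__init__()

        def block(c_in: int, c_out: int) -> list:
            # The conv bias is redundant when BatchNorm follows, so drop it in that case.
            layers = [nn.Conv2d(c_in, c_out, kernel_size=3, padding=1, bias=not batch_norm)]
            if batch_norm:
                layers.append(nn.BatchNorm2d(c_out))
            layers += [nn.ReLU(), nn.MaxPool2d(2)]
            return layers

        # Spatial size: 28 -> 14 -> 7 after the two max-pools.
        self.features = nn.Sequential(*block(1, 32), *block(32, 64))
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(64 * 7 * 7, 128), nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(128, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


def train_one_epoch(model: nn.Module, loader: DataLoader, optimizer: torch.optim.Optimizer):
    """One pass over the data; the same function is used for every architecture."""
    loss_fn = nn.CrossEntropyLoss()
    model.train()
    total_loss, correct, seen = 0.0, 0, 0
    for images, labels in loader:
        optimizer.zero_grad()
        logits = model(images)
        loss = loss_fn(logits, labels)
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * labels.size(0)
        correct += (logits.argmax(dim=1) == labels).sum().item()
        seen += labels.size(0)
    return total_loss / seen, correct / seen  # mean loss, accuracy


# Usage: random tensors stand in for the real dataset.
fake_data = TensorDataset(torch.randn(64, 1, 28, 28), torch.randint(0, NUM_CLASSES, (64,)))
results = {}
for name, make_model in [("SimpleNN", SimpleNN), ("CNN", CNN), ("CNN+BN", lambda: CNN(batch_norm=True))]:
    torch.manual_seed(0)  # reproducible weight initialization for each model
    # A dedicated generator gives every model the same batch order.
    loader = DataLoader(fake_data, batch_size=16, shuffle=True,
                        generator=torch.Generator().manual_seed(0))
    model = make_model()
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    results[name] = train_one_epoch(model, loader, optimizer)
```

Because `CNN()` and `CNN(batch_norm=True)` share everything except the normalization layers, a gap between their learning curves is more plausibly due to Batch Normalization than to differences in capacity or training setup.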

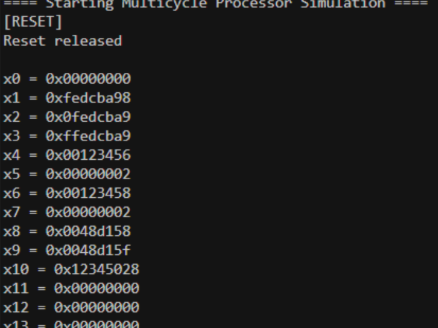

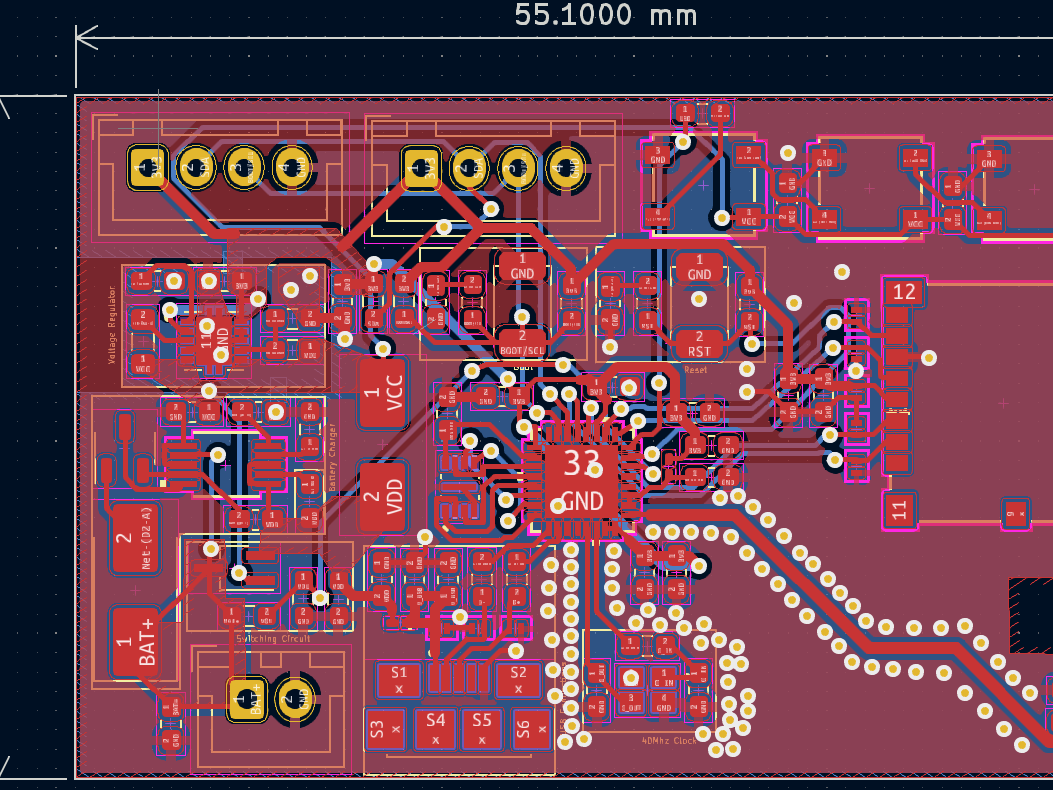

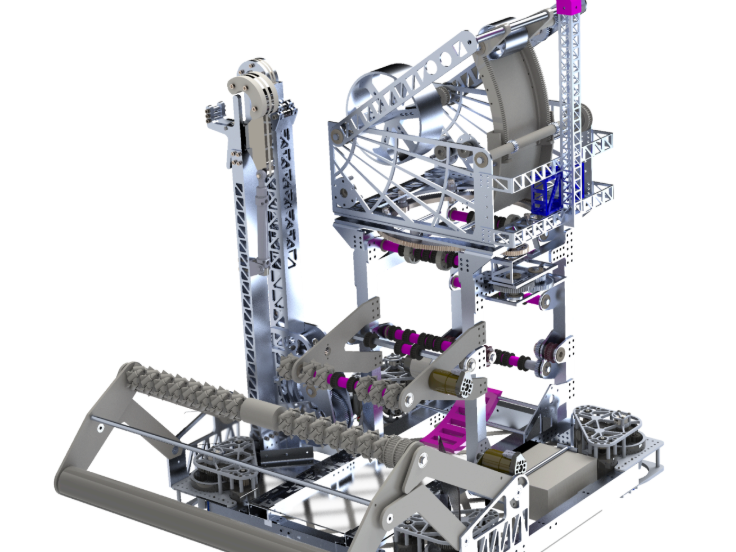

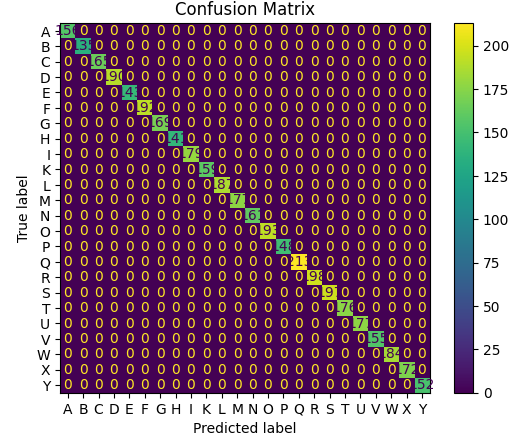

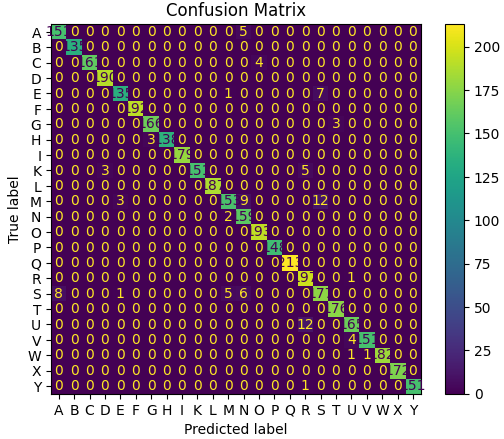

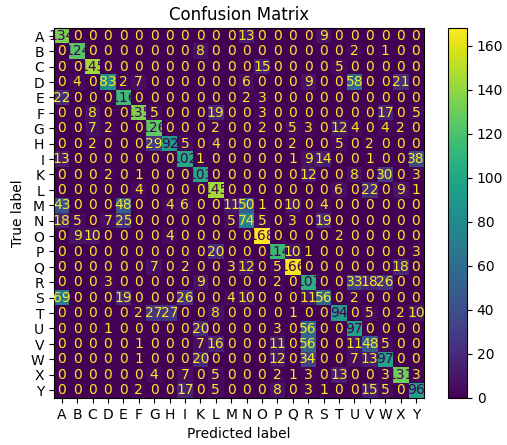

Confusion matrices for the three models: CNN with Batch Normalization, CNN, and SimpleNN.
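A confusion matrix counts, for each true class, how often the model predicted each class; off-diagonal cells show which signs get mixed up. The minimal NumPy sketch below computes one, along with per-class recall and the most-confused pairs. It illustrates the idea and is not necessarily how the figures above were produced; the function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # handles repeated (i, j) pairs
    return cm


def per_class_recall(cm):
    """Fraction of each true class predicted correctly (diagonal / row sum)."""
    totals = cm.sum(axis=1)
    return np.divide(np.diag(cm), totals, out=np.zeros(len(cm)), where=totals > 0)


def most_confused_pairs(cm, k=3):
    """Largest off-diagonal entries as (true, predicted, count), skipping zeros."""
    off = cm.copy()
    np.fill_diagonal(off, 0)
    flat = np.argsort(off, axis=None)[::-1][:k]
    rows, cols = np.unravel_index(flat, off.shape)
    return [(int(r), int(c), int(off[r, c])) for r, c in zip(rows, cols) if off[r, c] > 0]


# Toy example with three classes; classes 1 and 2 get swapped once each.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 1], num_classes=3)
recall = per_class_recall(cm)   # [1.0, 0.5, 0.5]
pairs = most_confused_pairs(cm)
accuracy = np.trace(cm) / cm.sum()
```

Per-class recall is often more informative than overall accuracy here, because a model can score well overall while consistently confusing a few visually similar handshapes.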

Results and Impact

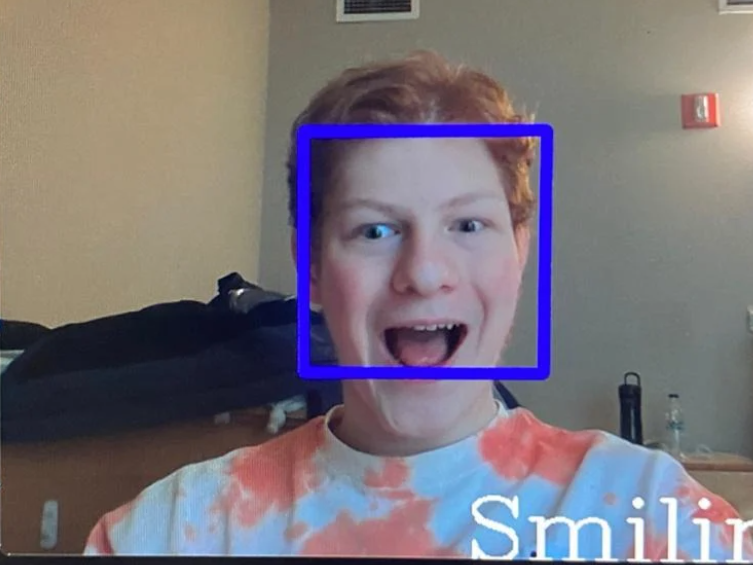

The results spoke for themselves: the SimpleNN struggled, while the CNNs, especially the one with Batch Normalization, achieved near-perfect recognition, and our best model reached 100% accuracy on the test set. I analyzed learning curves, confusion matrices, and validation metrics to confirm performance and understand edge cases. Beyond static testing, we extended the system to run in real time on webcam input, turning the project into a prototype for practical ASL recognition. What began as an experiment in comparing architectures grew into a powerful proof of concept: machine learning can indeed learn to read hands.
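For the real-time extension, each webcam frame has to be converted into the same format the model saw during training. The sketch below assumes a model trained on 28x28 grayscale images normalized to [-1, 1], a hand roughly centered in the frame, and BGR channel order (what OpenCV's `cv2.VideoCapture.read()` returns). `preprocess_frame` and `predict_frame` are hypothetical helpers, not the project's code; the demo uses a random array and a stand-in linear model so it runs without a camera.

```python
import numpy as np
import torch
import torch.nn.functional as F


def preprocess_frame(frame_bgr: np.ndarray, size: int = 28) -> torch.Tensor:
    """Turn an HxWx3 uint8 BGR frame into a (1, 1, size, size) model input."""
    h, w, _ = frame_bgr.shape
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    # Center square crop, scaled to [0, 1].
    crop = frame_bgr[top:top + side, left:left + side].astype(np.float32) / 255.0
    # ITU-R BT.601 luma weights, listed in B, G, R order to match the frame.
    gray = crop @ np.array([0.114, 0.587, 0.299], dtype=np.float32)
    x = torch.from_numpy(gray)[None, None]              # (1, 1, side, side)
    x = F.interpolate(x, size=(size, size), mode="area")  # average-pool down to 28x28
    return (x - 0.5) / 0.5                               # same normalization as training (assumed)


@torch.no_grad()
def predict_frame(model: torch.nn.Module, frame_bgr: np.ndarray, labels):
    """Return the most likely label and its softmax probability."""
    model.eval()
    probs = torch.softmax(model(preprocess_frame(frame_bgr)), dim=1)[0]
    confidence, idx = probs.max(dim=0)
    return labels[int(idx)], float(confidence)


# Demo: a random 640x480 frame and a stand-in model with three hypothetical classes.
demo_model = torch.nn.Sequential(torch.nn.Flatten(), torch.nn.Linear(28 * 28, 3))
demo_frame = np.random.randint(0, 256, (480, 640, 3), dtype=np.uint8)
letter, confidence = predict_frame(demo_model, demo_frame, labels=["A", "B", "C"])
```

In a live loop, one option is to call `predict_frame` on each frame from `cv2.VideoCapture(0).read()` and display the predicted letter only when `confidence` clears a threshold, which helps suppress flicker between signs.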